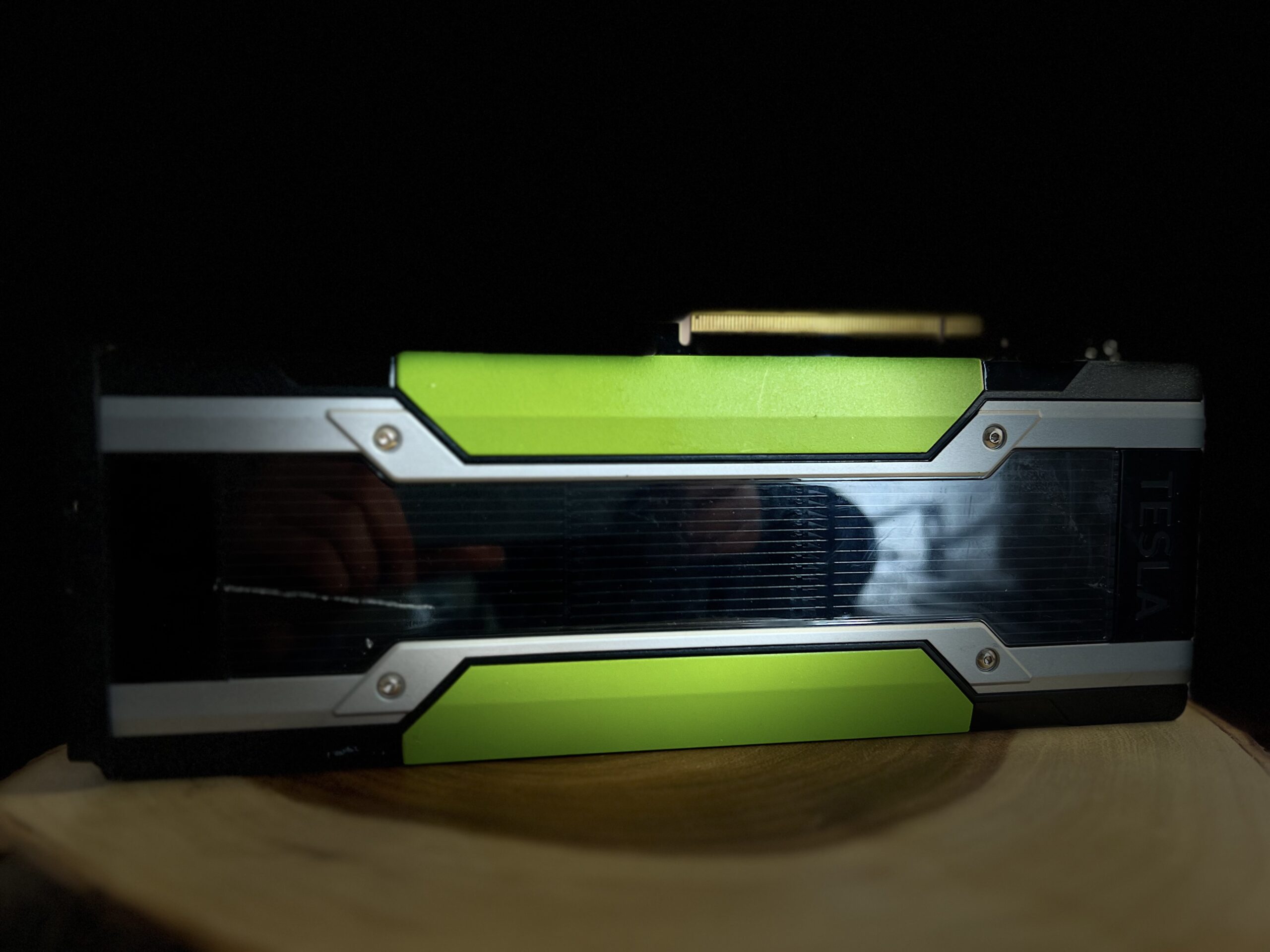

I recently began working with LLMs (models like the ones behind ChatGPT, but running on your own computer). To be honest, I've come to realize the staggering costs of even running an LLM, let alone figuring out why you would do so in the first place. If you try to run an LLM on a laptop without a desktop-class GPU, it runs incredibly slowly. So I began taking a more serious look at older GPUs, such as NVIDIA's Tesla series, which now sell for remarkably low prices because they are so difficult to install and use. That's because they were designed to be installed in dedicated server racks that simply crunched numbers all day.

If you want to dive straight into a demo, you can chat with my AI, currently running on the Tesla P40, further down this page OR here.

TMI? Go talk about it with my ChatAI

Facts and Info

Connecting the NVIDIA Tesla GPU to my External GPU Adapter

I wanted to see whether I could use a GPU without building an entire desktop. After days of trying different methods to get the NVIDIA Tesla GPU working with my external GPU adapter (designed for gaming GPUs), I finally got it running, and the results exceeded my expectations.

Here is a quick summary of how I connected my Thunderbolt-enabled MacBook Pro to the Tesla series GPUs (K80 and P40). After a fair amount of struggle and many failed configurations, I got the MacBook to recognize the NVIDIA Tesla GPU over its USB-C Thunderbolt 3 connection, using the AKITO Node Pro external GPU enclosure.

Operating System Setup

Windows 10 was the only OS that proved workable. I chose version 1903v1, which can be downloaded via archive.org. I tried later versions of Windows, but they gave me the dreaded Code 12 error ("This device cannot find enough free resources that it can use"). macOS is not an option, since it does not support NVIDIA GPUs. On Linux, I tried Ubuntu 20.04 but could not get the Thunderbolt connection to recognize the GPU fully. I do think it's feasible: I could see the card as a device, but I couldn't find a way to bring it up properly as a GPU display device, and it was recognized as a generic USB peripheral.
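Since the Windows build made the difference between a working eGPU and Code 12, it can help to confirm which build you are on before hot-plugging the enclosure. The sketch below is an illustration rather than part of the original setup: it assumes Python is installed on the Windows side and relies on Windows 10 version 1903 reporting build number 18362. The helper name `windows_build` is hypothetical.

```python
import platform
from typing import Optional

# Windows 10 version 1903 (May 2019 Update) reports build 18362.
WIN10_1903_BUILD = 18362


def windows_build(version_string: Optional[str] = None) -> Optional[int]:
    """Return the build number from a version string such as '10.0.18362'.

    With no argument, reads platform.version() on Windows (format '10.0.<build>').
    Returns None on other systems or if the string does not look like that.
    """
    if version_string is None:
        if platform.system() != "Windows":
            return None
        version_string = platform.version()
    parts = version_string.split(".")
    if len(parts) < 3 or not parts[2].isdigit():
        return None
    return int(parts[2])


if __name__ == "__main__":
    build = windows_build()
    if build is None:
        print("Could not read a Windows build number on this system")
    elif build == WIN10_1903_BUILD:
        print("Windows 10 version 1903 (build 18362)")
    else:
        print(f"Build {build}, not version 1903")
```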

Power Connection Setup

The Tesla GPU is connected via the AKITO Node Pro Thunderbolt 3 External PCIe GPU Case, which offers a standard x16 PCIe slot. For power, the Tesla GPU needs a non-traditional 8-pin ATX input. This minor inconvenience is easily solved with an ATX 8-pin to 8-pin PCIe power cable: connect the 8-pin PCIe end to the power supply unit and the ATX 8-pin end to the NVIDIA Tesla, and the GPU's power requirements can be met. At a minimum, the cable must provide 75 watts, assuming the x16 PCIe slot supplies another 75 watts (the most the PCIe specification allows a slot to deliver), since the NVIDIA Tesla GPUs require at least 150 watts to function. In my use, I have not seen it draw more than 150 W.

- Notes: In my setup, the PCIe-to-ATX power cable that powers the Tesla provides 75 watts, and the AKITO Node Pro's x16 PCIe slot that the Tesla plugs into contributes up to another 75 watts.

I changed the default power connection setup that the Node Pro uses by doing the following:

I used a very basic power connector: an 8-pin ATX to 6+2-pin PCIe power cable. The 8-pin ATX end plugs into the NVIDIA Tesla GPU, while the PCIe end connects to an 8-pin PCIe power output on the PSU.

- The AKITO Node's PSU has two 8-pin PCIe outputs, both of which are used in the default configuration to power a typical GPU. That configuration does not work with the Tesla GPU, which takes power through an 8-pin ATX connector instead.

- I replaced one of the PCIe outputs with my PCIe-to-ATX power cable so the Tesla GPU could connect to the PSU through its ATX input.

- I did not use any power splitters, since I did not need more power than my connector provides. The Tesla series GPUs can draw up to 300 watts.

- The PCIe-to-ATX power cable that powers the Tesla provides only 75 watts.

- Fortunately, the AKITO Node Pro's x16 PCIe slot, which the Tesla GPU plugs into, supplies up to another 75 watts.

- With this configuration, I can supply the Tesla GPU with about 150 watts (75 W from the cable plus 75 W from the slot), the minimum it needs to run, and in my case more than sufficient for running inference on language models or training them.
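The power arithmetic behind this list is simple enough to sanity-check in a few lines. This is a sketch under stated assumptions: it takes the 75 W cable figure from the list, assumes the slot delivers the full 75 W the PCIe specification allows an x16 slot, and uses 250 W as the Tesla P40's rated board power. The constant and function names are illustrative.

```python
# Assumed figures: 75 W from the PCIe-to-ATX cable (as quoted above),
# 75 W from the x16 slot (PCIe spec maximum), 250 W P40 rated board power.
PCIE_X16_SLOT_MAX_W = 75
CABLE_W = 75
P40_BOARD_POWER_W = 250


def available_watts(cable_w: float, slot_w: float = PCIE_X16_SLOT_MAX_W) -> float:
    """Total power the card can draw from the cable plus the slot."""
    return cable_w + slot_w


def headroom_watts(draw_w: float, cable_w: float = CABLE_W) -> float:
    """Positive means spare capacity; negative means the supply falls short."""
    return available_watts(cable_w) - draw_w


if __name__ == "__main__":
    print("Available:", available_watts(CABLE_W), "W")  # 150 W
    print("Headroom at a 150 W peak:", headroom_watts(150), "W")  # 0 W
    print("Headroom at full board power:", headroom_watts(P40_BOARD_POWER_W), "W")
```

With these figures there is no margin at a 150 W peak and the budget sits about 100 W below the P40's full rated power, so the card's actual draw is what matters. `nvidia-smi --query-gpu=power.draw --format=csv` reports the current draw if you want to check your own numbers.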

Thunderbolt Connection to MacBook Setup

The GPU is connected to the MacBook by hot-plugging the AKITO Node Pro. In my experience with eGPU setups on MacBooks, the connection only works when the GPU is hot-plugged.

This means the eGPU must be connected only after the MacBook Pro has fully booted into Windows. I simply boot my MacBook through Boot Camp, enter Windows 10, and log in to my Windows user account as normal. However, I make sure no other devices are connected to the MacBook's USB-C/Thunderbolt ports, since they can keep the GPU from connecting. (The eGPU connection needs a lot of system resources, and other USB connections can compete for them.)

Once Windows 10 finally recognized the connection as a proper display device, I followed NVIDIA's standard instructions for installing the CUDA libraries and drivers, and then got PyTorch working. I found the performance remarkably similar to my GPU server, which uses a newer NVIDIA Titan RTX.
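A quick way to confirm that PyTorch actually sees the Tesla card after the driver and CUDA install is to list the visible CUDA devices. A minimal sketch, assuming PyTorch with CUDA support is installed; it returns an empty device list instead of failing when PyTorch or a GPU is missing. The function name is hypothetical.

```python
import importlib.util


def describe_cuda_devices() -> dict:
    """Summarize the CUDA devices PyTorch can see (empty list if none)."""
    if importlib.util.find_spec("torch") is None:
        return {"torch_installed": False, "devices": []}
    import torch  # imported lazily so the check works without PyTorch

    devices = []
    if torch.cuda.is_available():
        for i in range(torch.cuda.device_count()):
            major, minor = torch.cuda.get_device_capability(i)
            props = torch.cuda.get_device_properties(i)
            devices.append({
                "index": i,
                "name": torch.cuda.get_device_name(i),
                "compute_capability": f"{major}.{minor}",
                "total_memory_gib": round(props.total_memory / 1024**3, 1),
            })
    return {"torch_installed": True, "devices": devices}


if __name__ == "__main__":
    print(describe_cuda_devices())
```

A Tesla P40 should report compute capability 6.1 and about 24 GB of memory, while a K80 shows up as two separate devices with compute capability 3.7.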

In my testing, the NVIDIA Tesla P40 running through a Thunderbolt eGPU setup performed comparably to desktops driven by present-day GPUs. Be mindful, however, that a Thunderbolt 3 (USB-C) eGPU link carries at most four PCIe 3.0 lanes, well under half the bandwidth of a native x16 PCIe slot on the motherboard. I used the NVIDIA GPU to train various language models, and I also successfully fine-tuned Stable Diffusion models. As a writer, I took a particular interest in the language models. I initially saw AI as just another competitor in writing and coding, but my perspective shifted once I appreciated the genuine capabilities of the growing generative AI movement.
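The bandwidth gap can be put in numbers. This sketch computes theoretical one-direction PCIe 3.0 throughput (8 GT/s per lane with 128b/130b encoding) for an x4 Thunderbolt link and a native x16 slot, then the best-case time to copy 6.5 GB, roughly the weights of a 13-billion-parameter model at 4 bits per weight. Real Thunderbolt throughput is lower than this ceiling; the names are illustrative.

```python
# Theoretical PCIe 3.0 throughput: 8 GT/s per lane, 128b/130b line encoding.
PCIE3_TRANSFERS_PER_S = 8e9
PCIE3_ENCODING = 128 / 130


def pcie3_bandwidth_gb_s(lanes: int) -> float:
    """One-direction bandwidth in GB/s (10^9 bytes/s), before protocol overhead."""
    return lanes * PCIE3_TRANSFERS_PER_S * PCIE3_ENCODING / 8 / 1e9


def transfer_seconds(size_gb: float, lanes: int) -> float:
    """Best-case time to move size_gb gigabytes across the link."""
    return size_gb / pcie3_bandwidth_gb_s(lanes)


if __name__ == "__main__":
    for label, lanes in (("Thunderbolt 3 eGPU (x4)", 4), ("Native slot (x16)", 16)):
        bw = pcie3_bandwidth_gb_s(lanes)
        secs = transfer_seconds(6.5, lanes)
        print(f"{label}: {bw:.2f} GB/s, 6.5 GB in {secs:.1f} s")
```

The x4 link has a quarter of the x16 bandwidth. For single-GPU inference this mostly shows up as longer model load times, since once the weights sit in GPU memory comparatively little data typically crosses the link per token.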

I can sum up what I've realized about generative AI (and machine-learning-based programs in general), including its inherent limitations and potential, in a simple rule:

Even in its most sublime form, it is less than a reflection or approximation of the artist whose work it is modeled on; it is a mere shadow of the artist's original creation.

Or, explained more logically:

This reflection, no matter how abstract, can never attain the full magnitude of the original artist's capacity. The limitation arises because the AI's decisions are based on a simplified representation of the artist's work, which it uses as an abstraction to generate further creations. That process also needs an initial spark of inspiration from which to grow, much as a Taylor series rebuilds a function only from its derivatives at a single starting point $a$:

$$
f(x) \approx f(a) + \frac{f'(a)}{1!}(x-a) + \frac{f''(a)}{2!}(x-a)^{2} + \frac{f'''(a)}{3!}(x-a)^{3} + \cdots
$$
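To make the Taylor-series analogy concrete, here is a small illustration: a third-order expansion of e^x around a = 0. Every derivative of e^x is e^x, so each coefficient is e^a. Close to the starting point the approximation is nearly exact; the farther x moves from a, the further it falls short of the real function.

```python
import math


def taylor_exp(x: float, a: float = 0.0, order: int = 3) -> float:
    """Truncated Taylor series of e^x around a (every derivative equals e^a)."""
    return sum(math.exp(a) * (x - a) ** n / math.factorial(n) for n in range(order + 1))


if __name__ == "__main__":
    for x in (0.1, 1.0, 3.0):
        approx, exact = taylor_exp(x), math.exp(x)
        print(f"x={x}: approx={approx:.4f} exact={exact:.4f} error={exact - approx:.4f}")
```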

As a passionate writer, I have come to realize that I can further enhance the capabilities of generative AI rather than being pushed aside by it. This realization has given me a remarkable avenue for personal growth as a writer, and it has also led me to build highly specialized language models that respond with real insight to specific data inputs.

Recently, you may have noticed a surge in companies adopting “AI” chat assistants. Unfortunately, these tools often fall short: they give only general responses and fail to address matters specific to their role as a business's support agent. This is mainly because training a language model to be genuinely useful on a specialized dataset is far more complex than simply feeding it as much data as possible to create a giant language model, as with ChatGPT. While such large language models can answer general queries, their specialized knowledge is unsatisfactory, and their understanding of how to support a company's internal business services is virtually nonexistent.

However, I am pleased to share that, as my examples demonstrate, I may have found a workflow for training specialized language models. I hope to compile a guide on the subject soon, so stay tuned for further updates.

State of GPU-Enabled Hosting Services and the Power of Choice

The surge in demand for GPU-intensive computing has sparked a fascinating trend among cloud service providers: they now offer GPU “enabled” servers, a boon for anyone without such high-powered machines at their disposal. I have used every major cloud service provider extensively (Google, IBM, AWS, Oracle, and even GoDaddy), and these servers typically cost around a dollar per hour to run. If you crunch the numbers, running one around the clock costs roughly 730 dollars a month (24 hours × about 30.4 days), which adds up to 2,000 dollars in under three months. So, what kind of GPUs do these cloud providers offer for a dollar per hour? Interestingly, they're the same kind we're discussing in this post. To put things into perspective, 2,000 dollars could buy approximately 10-20 Tesla P series GPUs.
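The rent-versus-buy comparison reduces to simple arithmetic. A sketch under stated assumptions: a flat one dollar per hour, round-the-clock use, and a per-card price of 200 dollars (the high end implied by 2,000 dollars buying 10 cards). It ignores electricity, the host machine, and cooling; the function names are illustrative.

```python
HOURS_PER_MONTH = 24 * 365 / 12  # an average month: 730 hours


def monthly_cloud_cost(rate_per_hour: float, hours: float = HOURS_PER_MONTH) -> float:
    """Cost of renting one GPU server around the clock for a month."""
    return rate_per_hour * hours


def breakeven_months(hardware_cost: float, rate_per_hour: float) -> float:
    """Months of cloud rental that would pay for the hardware outright."""
    return hardware_cost / monthly_cloud_cost(rate_per_hour)


if __name__ == "__main__":
    print(f"Monthly cloud cost: ${monthly_cloud_cost(1.0):,.0f}")
    print(f"$2,000 of rental lasts {breakeven_months(2000, 1.0):.1f} months")
    days = breakeven_months(200, 1.0) * HOURS_PER_MONTH / 24
    print(f"A $200 card costs as much as {days:.0f} days of rental")
```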

This isn't meant to discourage the use of cloud providers, but to highlight the power of choice and the potential for growth. With the same investment, you could build your own server with newer NVIDIA GPUs, such as the Ampere series, which offer significant advances in quantized (low-precision) computation. In the ever-evolving landscape of machine learning, it's exciting to see how these developments will shape the future of computation and data processing.

After fine-tuning, the newly tuned model improved considerably, as the perplexity scores below show (lower is better). The result left even me in a state of profound wonder; I believe it is now approaching the level of OpenAI's forthcoming GPT-4-turbo model.

| Model | Dataset | Perplexity |
|---|---|---|
| liulock_Llama-3-8b-instruct_merged* | spicyborostrain-textconverted | 7.96 |
| liulock_jondurbin_airoboros-l2-13b-420-merged | spicyborostrain-textconverted | 2.76 |
| liulock_jondurbin_airoboros-l2-13b-420-merged | wikitext | 6.32 |
| liulock_airoboros_13b_v4_main | spicyborostrain-textconverted | 1119.44 |
| liulock_airoboros_13b_v4_main | wikitext | 3315.94 |

*Please note that Synthethics has been updated to use a smaller 8B-parameter model and is now at version 7! To read more about this update, head to linkedinliu.com, where it is discussed.
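For readers unfamiliar with the metric: perplexity is the exponential of the average negative log-likelihood a model assigns to each token of a dataset, so lower is better. The sketch below only illustrates the formula on made-up log-probabilities; it is not how the scores in the table were produced, and a real evaluation would take the per-token log-probabilities from the model itself.

```python
import math
from typing import Sequence


def perplexity(token_log_probs: Sequence[float]) -> float:
    """exp of the mean negative natural-log probability per token."""
    if not token_log_probs:
        raise ValueError("need at least one token")
    mean_nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(mean_nll)


if __name__ == "__main__":
    # A model that always gives the right token 25% probability is as
    # "perplexed" as a uniform choice among four options.
    print(perplexity([math.log(0.25)] * 10))
```

Scores are only comparable on the same dataset and tokenizer, so the wikitext and spicyborostrain rows measure different things, and the Llama-3 row uses a different tokenizer from the airoboros-l2 (Llama 2) rows.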

Chat with Ziping's Chat Assistant Easily via Text

In the year since April 2023, you may have noticed my unwavering dedication to the complex task of dataset refinement. As we enter March 2024, I am excited to unveil the fifth edition of my chat assistant, meticulously fine-tuned on a dataset extracted from my extensive writings. This is not a compilation of a few academic essays but a comprehensive corpus equivalent to more than a thousand essays, spanning various topics and categories. Having written enough to form a dataset of that size has been instrumental in developing a competent language model.

The task of dataset creation is a formidable challenge that many underestimate. It is a large part of what enables a language model to generate coherent inferences, accounting for at least half the battle in machine learning. Machine learning draws on both probability and statistics to construct a model. Probability, a mathematical discipline, deals with the likelihood of events, while statistics focuses on discerning patterns and relationships in data. Together, they lay the groundwork for a language model to generate logical inferences.

The quandary is that probability is the implementation of statistics, while statistics is the implementation of probability. Probability is a mathematical concept that can be used to construct machine learning algorithms, whereas statistics is more of an art that requires a deep understanding of the domain to build a successful model. This is why constructing an effective language model is so challenging: machine learning applies both to form a model, a function that generates output from some input. Hence, even the best possible trained model will still fail at times, because it rests on a circular relationship with no true starting point.

SMS Texting with Ziping’s AI Chat Assistant is inactive.

SMS texting with Synthetics Chat AI (at 9792013000) is undergoing maintenance and redevelopment. In the meantime, chat with Synthetics through its web interface right below, or on its dedicated page here.

This AI, nicknamed “synthethics,” responds by emulating Ziping's written works but does not represent Ziping's official views or positions. It is provided for educational and/or research purposes only. Learn more here.

Synthetics Chat AI no longer uses an external GPU setup; I used the external GPU only to test the Tesla GPUs quickly. Tesla GPUs are complicated to use because they are passively cooled and rely on the strong, directed airflow of an enclosed server chassis. They may also not support the latest versions of CUDA, which becomes a problem when you need workarounds for newer forms of data quantization or computation that the Tesla GPU cannot handle. One issue I recently overcame was building a DIY cooling enclosure for the Tesla GPU, since I use it in a normal desktop chassis rather than the rackmount servers it was designed for. And once you start combining dozens of these GPUs in large rackmount servers, you are heading toward a computer far beyond any consumer-accessible hardware, commonly known as a supercomputer.
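One way to anticipate which newer features an older Tesla card lacks is to check its CUDA compute capability before choosing a quantization scheme or training precision. This simplified sketch covers only three well-known thresholds: INT8 dot-product (DP4A) instructions from compute capability 6.1, tensor cores from 7.0 (Volta), and bfloat16 tensor-core math from 8.0 (Ampere). Real libraries have their own, finer-grained requirements, and the function name is hypothetical.

```python
from typing import Dict, Tuple

# Simplified minimum compute capability (major, minor) for each feature.
FEATURE_MIN_CC: Dict[str, Tuple[int, int]] = {
    "int8_dp4a": (6, 1),          # INT8 dot-product instruction
    "tensor_cores": (7, 0),       # Volta and newer
    "bf16_tensor_cores": (8, 0),  # Ampere and newer
}


def supported_features(capability: Tuple[int, int]) -> Dict[str, bool]:
    """Map each feature to whether a GPU with this compute capability has it."""
    return {name: capability >= min_cc for name, min_cc in FEATURE_MIN_CC.items()}


if __name__ == "__main__":
    for gpu, cc in (("Tesla K80", (3, 7)), ("Tesla P40", (6, 1)), ("Ampere A100", (8, 0))):
        print(gpu, supported_features(cc))
```

On a live machine, `torch.cuda.get_device_capability(0)` returns the tuple to pass in.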

At present, Synthethics AI runs on a home server with the Tesla P40 GPU and a mid-tier CPU (Ryzen 2400GE). It can handle a 13-billion-parameter model and generates about 8 tokens per second using 4-bit quantization. The Tesla P40 also shines as a secondary GPU paired with a more powerful or newer GPU: its 24 GB of memory allows fast inference on much larger models than the more powerful GPU could run on its own. The new wiki site will provide more details on setup and workflow steps!
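Why a second card helps comes down to memory. A back-of-the-envelope sketch, assuming the weights dominate memory use and adding a flat, hypothetical 20% allowance for the KV cache and runtime buffers; actual 4-bit formats also use somewhat more than 4 bits per weight once scaling factors are included. The function names are illustrative.

```python
from typing import Sequence


def weight_memory_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights alone, in GB (10^9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


def fits_in_vram(n_params: float, bits_per_weight: float,
                 gpus_gb: Sequence[float], overhead: float = 0.2) -> bool:
    """True if weights plus an assumed overhead fit in the combined VRAM."""
    return weight_memory_gb(n_params, bits_per_weight) * (1 + overhead) <= sum(gpus_gb)


if __name__ == "__main__":
    print("13B @ 4-bit on one 24 GB P40:", fits_in_vram(13e9, 4, [24]))
    print("70B @ 4-bit on one 24 GB P40:", fits_in_vram(70e9, 4, [24]))
    print("70B @ 4-bit on 24 GB + 24 GB:", fits_in_vram(70e9, 4, [24, 24]))
```

Inference frameworks can split a model's layers across GPUs, which is what lets two cards hold a model neither could fit alone.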
